(that's also why I didn't blog much these last months)

We worked hard on it, but thanks to Murphy's law, we had a hard time two weeks before the go-live.

It started with the first user-testing week : everything was running nice & smooth but in the last minute (quite unbelievable),

we ended up with a blocking exception, which forced us to use some workaround techniques.

The exception was raised when a JMS message was sent from WLS to ALSB.

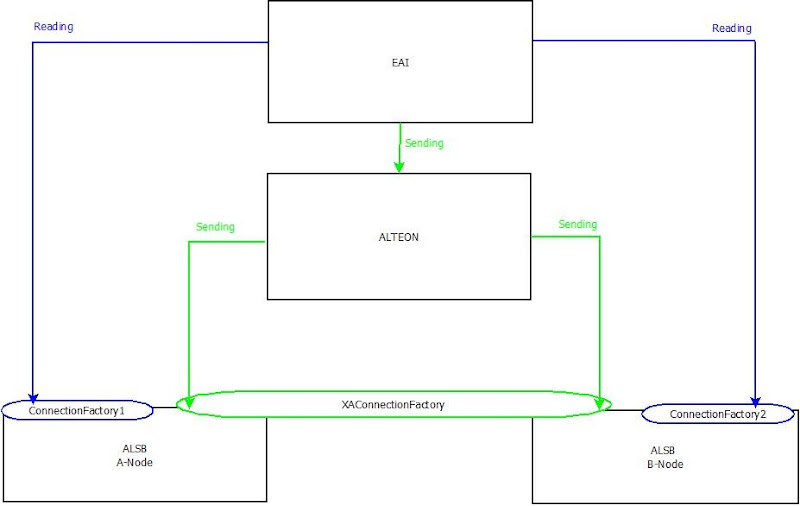

Here’s our architecture (sorry for the ugly stuff : at least, I know I will never be a web designer !)

* In green is the “Send” mechanism : when WebMethods wants to send a message to our system,

it first contacts the Alteon, which load-balances the request (with a server affinity) to a node,

then looks up in the JNDI tree for a connection factory,

which load-balances in its turn between the two nodes.

* In blue is the other mechanism, used to receive messages from our system.

As we use the same connection factory,

we have almost no guarantee to know where the messages are going to land.

We could have used the “message forward” system, embodied by the option “Forward Delay”,

but our system is particular : consumers are only consuming messages based on a JMS header,

so we could have a consumer connected but no one reading the messages.

(that’s why “Forward Delay” is useless in our case)

Then we had to have all the connectors doubled, to have both nodes served.

And to ensure a proper connection on each node, we had to create a connection factory on each.

Normally, when a message arrives, ALSB tries to persist the message into database (to be able to restore the message in case of crash).

During that normally basic operation, we had the following stacktrace :

Caused by: weblogic.messaging.kernel.KernelException: Error persisting message ... 9 more Caused by: weblogic.store.PersistentStoreFatalException: [Store:280065]java.sql.SQLException: ORA-01098:

erreur d'interface de programme lors d'une insertion longue ORA-12155: TNS : type de données incorrect dans le paquet MSWMARKER reçu (server="ALSB2a-01-COCESB" store="JDBCStore-0" table="jms_alsb1WLStore"):(Linked Cause,

"java.sql.SQLException: ORA-01098: erreur d'interface de programme lors d'une insertion longue ORA-12155: TNS : type de données incorrect dans le paquet MSWMARKER reçu ") at weblogic.store.io.jdbc.JDBCStoreIO.flush(JDBCStoreIO.java:1405) at weblogic.store.internal.PersistentStoreImpl.run(PersistentStoreImpl.java:640) at java.lang.Thread.run(Thread.java:595)

And we were able to reproduce it everytime on the A-Node!

As we could not really understand why it happened, we decided to cut that communication by switching to a filestore.

It was clear that this solution was a poor workaround since we didn't understand the conditions in which that exception occurred.

We spent three whole days trying to figure out why and we reached a partial conclusion : it happens only on the A-Node, with a JDBCStore.

With the filestore configured, everything seemed to be ok and we started to think this problem was behind us ... we were unfortunately mistaken ...

A week after that, while WebMethods was retrieving a JMS message from the bus, we noticed that it was reaaally slow.

And finally, we had an exception :

java.lang.Exception: weblogic.jms.common.JMSException: weblogic.messaging.dispatcher.DispatcherException:weblogic.rjvm.PeerGoneException: ; nested exception is: java.io.IOException:A complete message could not be read on socket:'weblogic.rjvm.t3.MuxableSocketT3@1f42269:Socket[addr=/10.203.3.143,port=9101,localport=57568]',in the configured timeout period of '60' secs

The JMS message was approximatively 3Mb but we already passed the test with a 15Mb message. Thus, the message size was not involved here.

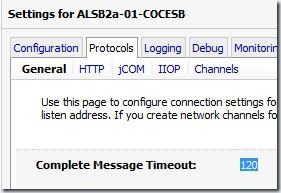

We first thought that it was a temporary network problem (latency) and then we tried first to increase a timeout value,

embodied by the option "Complete Message Timeout"

We shutted / re-started our servers and the first test passed. After several other tests, the exception showed up again, with the same value.

I read that sometimes this option had to be set thanks to a JVM property. (-Dweblogic.CompleteMessageTimeout).

Then we tried that, and after a restart, and a few retries, the exception raised again.

We also changed some options from the WebMethod side, but nothing changed.

We then tried on another environment (prod mirror) and the message was consumed in less than five seconds.

We switched the prod EAI toward the second environment : everything went fine too.

Then we tried on the second node of the ALSB cluster in our production environment, B-Node and the attempt was ok as well (= fast consumed).

From those two exceptions (outgoing communications with the database and the EAI), we started to question the network configuration,

since every outgoing communication presented a problem on that node, which is on machine A.

We used the netstat command to get some information and we noticed something interesting :

the number of collisions on machine A was abnormal because far too high.

Machine A netstat -ni Name Mtu Net/Dest Address Ipkts Ierrs Opkts Oerrs Collis Queue lo0 8232 127.0.0.0 127.0.0.1 17943151 0 17943151 0 0 0 e1000g0 1500 10.203.3.0 10.203.3.141 880127761 0 385849067 80 6141032 0 e1000g1 1500 10.203.72.0 10.203.72.28 60799620 0 199220059 0 0 0 e1000g4 1500 10.203.3.0 10.203.3.142 537705356 1651 8356636 0 0 0 Machine B netstat -ni Name Mtu Net/Dest Address Ipkts Ierrs Opkts Oerrs Collis Queue lo0 8232 127.0.0.0 127.0.0.1 1457911 0 1457911 0 0 0 e1000g0 1500 10.203.3.0 10.203.3.144 857275091 0 380178757 0 0 0 e1000g1 1500 10.203.72.0 10.203.72.29 50600947 0 158826403 0 0 0 e1000g4 1500 10.203.3.0 10.203.3.145 537677014 2344 8336692 0 0 0

We didn't have the rights to execute the command "ndd" so we asked our hosting provider to do so and then he came up with something :

After some talks, a guy from the hosting provider came up with an interesting fact :

"e1000g0 is configured in 100/HALF instead of 100/FULL"

In a word, the network card is in half-duplex whereas it should have been in full-duplex.

If you are unfamiliar with those terms, just think about a one-way road with a traffic light,

where a way can only pass at one at a time : this is half duplex.

With full-duplex, you have a two-way road, with no traffic light ...

You can imagine which one is better in terms of fluidity, can't you ?

To confirm that our problems came from that configuration,

we tried one last time to send a JMS message and have it consumed by the EAI and the result was pretty clear :

the message was consumed as fast as on the other platforms ...

We have not tested the JDBCStore yet, but we will in the next few weeks.

As a conclusion, I would say that no matter how skilled you are in your field of expertise, it is not enough.

You have to know more about the greater perimeter and be able to see the big picture to be more efficient.

(work especially on the network aspect ! :p)